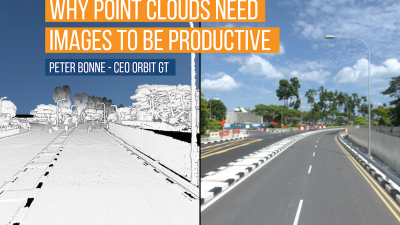

3D Mapping content is by default extremely large and complex. With Mobile Mapping, one easily collects a terabyte per vehicle each day. A medium-sized city easily ends up with tens of terabytes of data, probably a combination of imagery and point clouds. It’s a bit more modest when flying UAVs, scanning indoors or doing terrestrial work. But those sensors get better too, creating denser result sets that represent reality in more detail. Data volume is likely to grow further with every new sensor on the market.

Now, this is very cool for the engineer/specialist. Eat your heart out! But for the majority of City, DoT or Telco administrations, with their processes and workflows, this massive data is a nightmare. Traditionally, big chunks of data are chopped up in tiles, with a horrible UI on top to figure out which tile you need for your job, wasting time and money to set this up, manage it and get it to work.

NOT ANY MORE. Cutting the data into pieces and leaving the user to browse through an extensive catalog before even getting started? Wow, that’s so 90s. Aren’t you tired of that? There are now ways to hide the search through thousands of files and provide access to massive data with a simple user interface, nicely integrated into your workflow. As a user, you don’t see any tiling, it’s just one smooth and seamless dataset. So, how does it work?

To make this work, we need to optimize our data storage. Many of these techniques are not new, but their application to massive-volume 3D Mapping data is more recent. We’ve seen multiresolution and compression tools for ortho-imagery gain momentum in the late ’90s. We’ve seen Tiled Map Services pop up in the first decade of the 2000s. We’ve seen the rise of smart algorithms to organize and search data, such as the quad-tree and its relatives. We’ve seen more and more open data formats appear, not all of which have been successful, unfortunately. But in the end, we need a solution, not just technology.
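For readers who want to see what the quad-tree idea looks like in practice, here is a minimal Python sketch. It is only an illustration, not how any particular product stores its data: the names `Box` and `QuadTree` and the `capacity` and `max_depth` parameters are invented for this example. The point is simply that a search for "everything in this area" only visits the parts of the tree that overlap the area, instead of scanning every file or tile.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple

Point = Tuple[float, float]


@dataclass
class Box:
    """Axis-aligned rectangle, half-open: [xmin, xmax) x [ymin, ymax)."""
    xmin: float
    ymin: float
    xmax: float
    ymax: float

    def contains(self, p: Point) -> bool:
        return self.xmin <= p[0] < self.xmax and self.ymin <= p[1] < self.ymax

    def intersects(self, other: "Box") -> bool:
        return not (other.xmin >= self.xmax or other.xmax <= self.xmin
                    or other.ymin >= self.ymax or other.ymax <= self.ymin)


class QuadTree:
    """Hypothetical point quad-tree: a node splits in four once it is full."""

    def __init__(self, bounds: Box, capacity: int = 4,
                 depth: int = 0, max_depth: int = 16):
        self.bounds = bounds
        self.capacity = capacity
        self.depth = depth
        self.max_depth = max_depth  # stops endless splitting on duplicate points
        self.points: List[Point] = []
        self.children: Optional[List["QuadTree"]] = None

    def insert(self, p: Point) -> bool:
        if not self.bounds.contains(p):
            return False  # point lies outside this node
        if self.children is None:
            if len(self.points) < self.capacity or self.depth >= self.max_depth:
                self.points.append(p)
                return True
            self._split()
        return any(child.insert(p) for child in self.children)

    def _split(self) -> None:
        b = self.bounds
        mx, my = (b.xmin + b.xmax) / 2, (b.ymin + b.ymax) / 2
        quadrants = [Box(b.xmin, b.ymin, mx, my), Box(mx, b.ymin, b.xmax, my),
                     Box(b.xmin, my, mx, b.ymax), Box(mx, my, b.xmax, b.ymax)]
        self.children = [QuadTree(q, self.capacity, self.depth + 1, self.max_depth)
                         for q in quadrants]
        # Push the points stored here down into the new children.
        old, self.points = self.points, []
        for p in old:
            any(child.insert(p) for child in self.children)

    def query(self, area: Box) -> List[Point]:
        """Return all stored points inside `area`, skipping non-overlapping nodes."""
        if not self.bounds.intersects(area):
            return []
        found = [p for p in self.points if area.contains(p)]
        if self.children is not None:
            for child in self.children:
                found.extend(child.query(area))
        return found
```

A quick usage example: build a tree over a 100 x 100 area with `tree = QuadTree(Box(0, 0, 100, 100))`, call `tree.insert((x, y))` for each point, and then ask `tree.query(Box(0, 0, 25, 25))` for just the points in one corner. Real 3D Mapping storage works with far richer structures, but the principle of narrowing the search by area is the same.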

So here’s the answer.

1. First of all, any data needs to be prepped in such a way that it becomes easily accessible, meaning easy to search and fast to access and visualize. Whereas data size was important in the ’90s, I no longer believe it is relevant today. Performance is key.

2. Secondly, flexible tools must make it possible to share the massive content with as many users as possible or desired. No one wants to copy terabytes of data onto hard disks and connect them to each PC in the office, or ship them to customers. That approach limits usage from the start, which is the opposite of workflow integration.

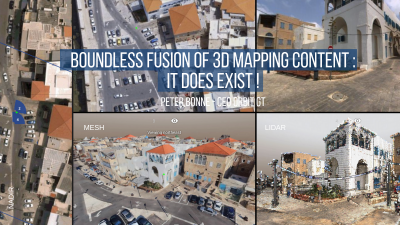

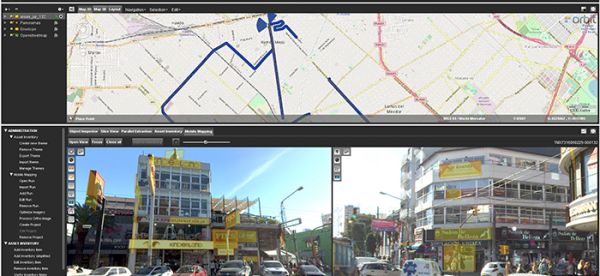

3. Third and maybe most important: a simple user interface. Simple, so you don’t need training to use it. And still access complex 3D data? Yes. Sure. Blend and fuse imagery from a variety of sources, fuse point clouds, meshes and vector data all in one. Yes. The user doesn’t care how hard the data was to produce, it just needs to work for him/her. Aerial, Oblique, UAV, Street level, Indoor, Terrestrial, GIS/CAD Vector, Meshes, Models, City Models, who cares? If you want to convince the end user to use such data, it’s going to be with one UI, a one-size-fits-all type of solution. Black boxes that support only one type of data, or worse, data from only one vendor, are totally obsolete.

4. And last but not least: Embed. Don’t let your 3D Mapping content linger on some website, bring it into your own workflow. Make sure it’s just another window in the software you use for your day-to-day operations.

Bringing complex 3D data into your workflow should be the standard today. There is no reason why it shouldn’t be: all answers and technologies are available, up and running.

This is why we do what we do at Orbit GT. Go get it!
